The Confidence Calibration Problem in Generative AI Document Processing

Since we introduced LLM-based key value extraction and highlighting in documents, this capability has become exceptionally popular among our users.

I find myself using it constantly as well.

Tuning an efficient prompt is faster (and more enjoyable) than labeling dozens of documents to train a traditional machine learning model, and the precision is generally quite satisfactory.

However, there’s a significant technical challenge that becomes apparent when examining the confidence factors associated with LLM-captured key values: these models consistently overestimate their confidence scores.

Most True Positive values (fortunately the majority) receive confidence scores of 100% – but so do the False Positive values. This pattern holds across every LLM we support on our platform: Llama, ChatGPT, BERT, and others.

This overconfidence renders traditional confidence-based filtering ineffective.

The standard approach of setting confidence thresholds to discriminate between True Positives and False Positives – a method that works excellently with traditional machine learning models – simply fails with generative AI.

The Root Cause: Generative vs. Discriminative Models

When I asked Amit, our Chief Data Scientist, why generative AI excels at information capture but struggles with confidence evaluation, his response was characteristically insightful: “Henri, it’s because LLMs are generative models, while traditional ML models are discriminative.”

This distinction is fundamental to understanding the confidence scoring problem. The issue isn’t specific to particular use cases – it’s architectural.

LLMs are trained on vast text corpora using self-supervised learning, recognizing patterns in word usage and context with minimal human guidance.

When generating responses – even simple keyword detection tasks – the model doesn’t evaluate factual correctness.

Instead, it produces the most statistically likely response based on observed training patterns.

The only validation mechanism is checking whether the response matches the prompt criteria.

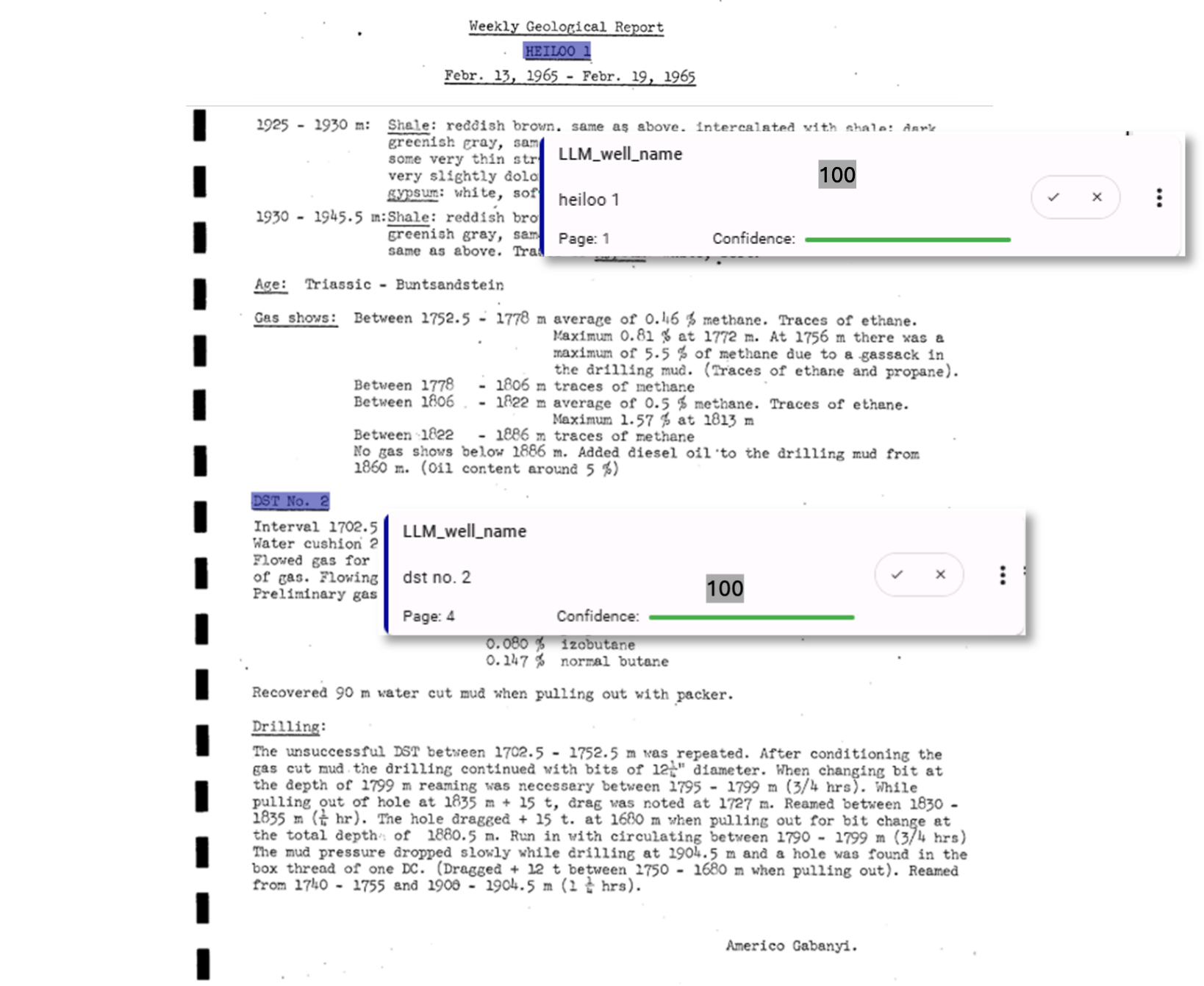

Technical Example: Well Name Detection

Consider this prompt designed to detect well names in Dutch North Sea drilling reports:

What are the names of the wells of the document? Frequently, they are specified as a string starting with a letter or a number or the name of the location, followed by a separator that can be a “/” or a “-“, followed by a number or letter. Do not capture strings that are part of larger strings, but only capture whole strings separated by white spaces. Provide the name of the well and a confidence factor.

When applied to a drilling report, this prompt detected two entities with 100% confidence:

Figure 1: Two well names have been detected and scored at 100% by a LLM with a prompt defined by the user.

The first detection, “HEILOO 1,” is correctly identified as a well name despite limited contextual clues – an impressive result. However, “DST No. 2” (Drill Stem Test No. 2) is a gas test procedure, not a well name, yet it also receives 100% confidence.

The overconfidence occurs because “DST No. 2” perfectly matches the structural criteria: starts with a letter (“D”), followed by letters/numbers (“ST No”), followed by separator and numbers (“.2”). The LLM has no mechanism to evaluate semantic appropriateness beyond pattern matching.

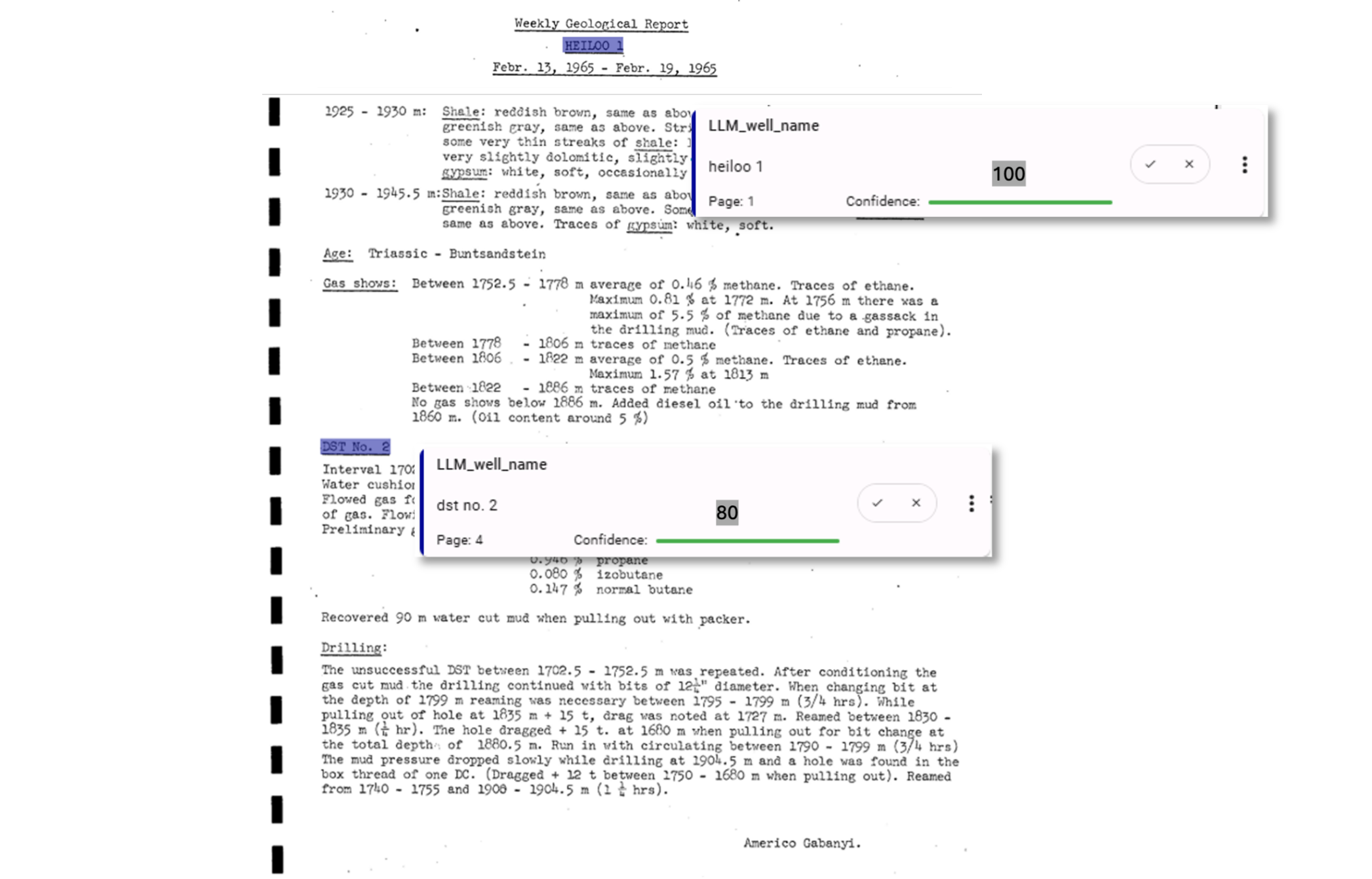

Prompt Engineering Improvements

One approach to address this involves enhancing prompt instructions for confidence evaluation:

What are the names of the wells of the document? Frequently, they are specified as a string starting with a letter or a number or the name of the location, followed by a separator that can be a “/” or a “-“, followed by a number or letter. Do not capture strings that are part of larger strings, but only capture whole strings separated by white spaces. Provide the name of the well and a confidence factor. The confidence factor will be evaluated using the structure of the detected well name and the text surrounding the name of the well.

This enhanced prompt produces marginally better discrimination:

Figure 2: Improving the prompt may help to improve the confidence score associated to the detected value (80 instead of 100)

While the False Positive confidence drops to 80%, this improvement is insufficient for reliable threshold-based filtering.

AgileDD’s Technical Solution: Hybrid Architecture

This is where AgileDD’s human-in-the-loop architecture provides a significant technical advantage.

Our platform enables authorized users to validate LLM-captured values, marking them as correct or incorrect. These human annotations become training data for a secondary machine learning model or small neural network specifically designed to score captured values using contextual features and accumulated user feedback.

This creates a powerful hybrid architecture:

Stage 1: LLM Extraction

- High recall capture of potential key values

- Pattern-based detection with broad coverage

- Fast processing of diverse document types

Stage 2: ML Discrimination

- Trained on human-validated examples

- Context-aware confidence scoring

- Precision-focused filtering

Stage 3: Continuous Learning

- User feedback integration

- Model refinement over time

- Domain-specific adaptation

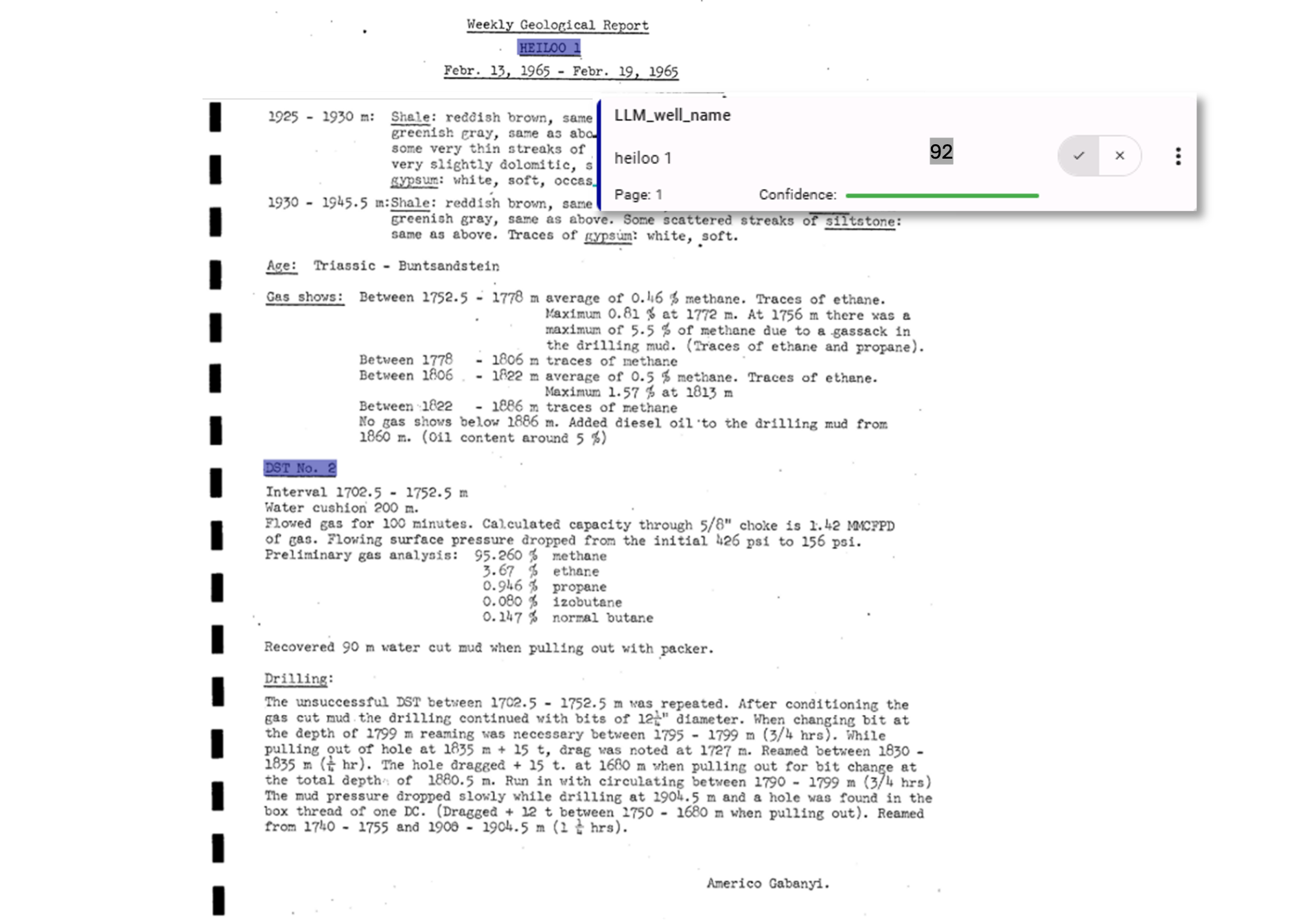

Results: Improved Confidence Discrimination

The hybrid approach produces significantly more accurate and discriminative confidence scores:

This confidence separation enables effective threshold-based filtering, allowing users to automatically discard low-confidence extractions while preserving high-quality results.

Technical Architecture Benefits

AgileDD’s approach leverages the complementary strengths of different NLP techniques:

- LLMs: Excellent pattern recognition and broad coverage (high recall)

- Traditional ML: Superior discrimination when trained on domain-specific examples (high precision)

- Human expertise: Essential for ground truth validation and edge case handling

This hybrid architecture addresses the fundamental limitation of generative models while preserving their processing advantages. The result is a document intelligence system that combines the speed and flexibility of LLM-based extraction with the reliability and precision that enterprise applications require.

The Path Forward

The overconfidence problem in generative AI isn’t a bug to be fixed – it’s an architectural characteristic that requires thoughtful system design to address effectively. Understanding this limitation has profound implications for anyone building production document intelligence systems.

Organizations rushing to implement LLM-based extraction without accounting for confidence calibration issues will face significant challenges in production environments where precision matters. The temptation to rely solely on generative AI’s impressive pattern matching capabilities overlooks the critical need for validation mechanisms.

At AgileDD, we’ve learned that the future of document intelligence lies not in choosing between generative AI and traditional machine learning, but in architecting systems that harness both approaches strategically. The human-in-the-loop isn’t just a safety net – it’s the feedback mechanism that transforms overconfident pattern matching into reliable, production-grade document intelligence.

As we continue refining this hybrid approach, we’re seeing increasingly sophisticated applications where LLM extraction feeds specialized ML models trained on domain-specific validation data. This evolution represents a maturation of AI-powered document processing from impressive demos to dependable enterprise solutions.

The next time you see an LLM confidently extracting information from your documents, remember: that confidence score tells you more about pattern matching than factual accuracy.

The real intelligence lies in knowing when and how to validate those confident assertions.